I am using this relevance as a custom filter to find and return the name of a file: pathnames of find files "*.com.ost" of (descendant folders of folder "C:\users"). When it runs, some results come back with the correct info, some come back as none, and some come back with “The expression could not be evaluated: class IllegalFileName”. Is there something I could do to fix the error?

A file name can’t contain a ‘*’ character, so that’s the illegal filename.

First, descendants is horrible; don’t use it if you don’t have to.

What is the exact relevance you are using?

I’m trying to capture the name of a user’s .ost file located in C:\Users\<currentuser>\AppData\Local\Microsoft\Outlook.

So you are trying to do something like:

q: files of folder "C:\"

A: "bootmgr" "" "" "" ""

A: "BOOTSECT.BAK" "" "" "" ""

A: "pagefile.sys" "" "" "" ""

q: (files of folder "C:\") whose (name of it ends with ".sys")

A: "pagefile.sys" "" "" "" ""

That is the idea, but when I do

q: (files of folder "C:\users") whose (name of it ends with ".ost")

A: none

That is why I was using the other line of code.

files whose(names of it as lowercase ends with ".ost") of folders "AppData\Local\Microsoft\Outlook" of folders of folders "C:\Users"

Do not use descendants or find files unless you absolutely must, and if you must, you are better off using it only in an analysis property that you set to report once every 12 hours or less often.
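To illustrate the difference outside of relevance, here is a rough Python sketch of the same fixed-depth idea: it only looks in one known subfolder per user profile and never walks the whole tree. The function name `find_ost_files` is made up for this example, and Python is used purely for illustration; it is not how the client evaluates relevance.

```python
from pathlib import Path


def find_ost_files(users_root):
    """Return .ost files found directly in
    <users_root>/<profile>/AppData/Local/Microsoft/Outlook.

    Only that one folder per profile is listed; nothing is searched recursively.
    """
    matches = []
    root = Path(users_root)
    if not root.is_dir():
        return matches
    for profile in sorted(root.iterdir()):
        outlook = profile / "AppData" / "Local" / "Microsoft" / "Outlook"
        try:
            if not outlook.is_dir():
                continue
            entries = sorted(outlook.iterdir())
        except OSError:
            # Skip profiles that cannot be read instead of failing the whole search.
            continue
        for entry in entries:
            # Case-insensitive match on the extension, like "as lowercase" above.
            if entry.is_file() and entry.name.lower().endswith(".ost"):
                matches.append(entry)
    return matches
```

The work done here grows with the number of profiles, not with the number of files on the drive, which is the property that makes the fixed-depth approach cheap.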

Hi jgstew, thanks to both of you. With the one given by jgstew, I get a “The operator “find files” is not defined” error.

I don’t understand. I don’t have “find files” in the relevance I posted, so I don’t know how you could get that error. My relevance should work without the need for “find files” if the “.ost” files are always in that folder.

Can you post the exact relevance you used to give you the “find files” is not defined error?

I am facing a similar issue when using descendants of folder. Do you know how I can find files on all fixed drives without descendants of folder?

example:

((pathname of it, size of it, sha1 of it) of find files "malware.exe" of (descendant folders of folder "" of drives whose (type of it = "DRIVE_FIXED")))

I understand that this is resource intensive, but in case I want to use such a solution, how can I avoid the illegal filename error?

You will likely never see the answer to that query in a regular deployment. That relevance could take hours to complete, depending on the size of your drive and the number of files, and the client will terminate expressions that take too long (triggered by the next message that comes in from the server).

If the file is at a specific depth under a known set of folders, as in @jgstew’s relevance above, that works, but the client is not good at scanning the entire drive.

This is the original post from @siddew : 2 parts: Find Files vs Dir /s /a ; How to store "find files" results in a file

The conversation should probably continue there.

Related: Analysis Help to find a file

Good point @AlanM. But now I am even more curious. Here is my reasoning.

You are right about relevance getting terminated. To get around this I had to set _BESClient_Resource_InterruptSeconds. I know that everyone recommends against doing so, but I want to understand the reasoning; read on.

While doing intensive tests on my own laptop, I found that the Dir command and the Find files command both took somewhere between 5 and 15 seconds on my whole laptop (500 GB, searching for all PDF/txt/exe files, not just one file).

Everyone seems to suggest that running relevance to search for files is a very bad idea. I truly want to understand why. If the drive is indexed and I know for sure that a dir command doesn’t take long, then why is it still a bad idea for a simple exe search on a hard drive? Is it just resources?

Is relevance, by its nature, a poor choice for these types of searches? Even @jgstew mentioned that I should use actions rather than an analysis (with relevance in it). Is the reason for this simply that relevance gets interrupted if it does not complete within 60 seconds (the default)?

My goal is to truly understand why running relevance or an action to do file searches (for targeted files) is a bad idea if I know that it can be accomplished in less than 20 seconds. I am trying to understand whether the BF client forces too many threads, which a direct dir call would not, or whether the client may crash from having to support multiple functions at the same time (updated content, other analyses, regular patches).

I may not find the best way to do a file search, but I just want to dig deeper into BF clients.

I believe the relevance will not use any existing index of the volume to report back all of the files that it contains.

In my experience using the fixlet debugger to search all files on a system, it takes a very long time and always returns the error “The expression could not be evaluated: class IllegalFileName” referenced above.

Oh yeah!! Story of my life. That illegal file name was the whole reason I started to dislike relevance.

That seems like an odd reason to dislike relevance as a whole, but I do agree that it should just skip any files with that problem rather than throwing an error.

I was just kidding about disliking it, but yeah, it did get on my nerves. The worst part was that I opened a PMR to understand the exact cause of IllegalFileName (many devices worked and many didn’t), and the only response I got was that it can happen for many reasons. Are there no logs that we can collect to identify the reasons for these errors?

Well the better point is that if you are looking for a plural result, then any errors should be skipped over instead of halting everything.

I’m wondering if this illegal filename is coming from the expression being interrupted.

IllegalFileName should only be thrown if the filename is 0 length or it has one of these characters in the name: < > : " / \ | *

Just wanted to drop a note about the error:

The expression could not be evaluated: class IllegalFileName

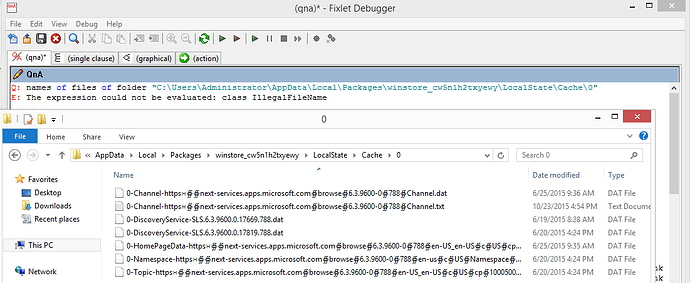

On my system it is occurring when the engine starts looking through the following folder:

“C:\Users\Administrator\AppData\Local\Packages\winstore_cw5n1h2txyewy\LocalState\Cache\0”

Note… this is likely a cache on my system so it is unlikely to be on your system. In this folder there are many files that look like this:

0-Channel-https∺∯∯next-services.apps.microsoft.com∯browse∯6.3.9600-0∯788∯Channel

Explorer seems to accept these characters but relevance encounters an error when going over them.
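As a quick cross-check, the rule quoted earlier in the thread (zero length, or one of < > : " / \ | * in the name) can be written down and applied to this file name. This is only a Python sketch of the rule as it was stated in the thread; the character list comes from that post, not from product documentation, and `violates_stated_rule` is a made-up name.

```python
# Characters named earlier in the thread as triggering IllegalFileName.
# Assumption: this list is copied from that post, not from the client's source.
ILLEGAL_CHARS = set('<>:"/\\|*')


def violates_stated_rule(name):
    """True if the name is empty or contains one of the listed characters."""
    return len(name) == 0 or any(ch in ILLEGAL_CHARS for ch in name)


cache_name = (
    "0-Channel-https∺∯∯next-services.apps.microsoft.com"
    "∯browse∯6.3.9600-0∯788∯Channel"
)

print(violates_stated_rule("*.com.ost"))     # wildcard pattern used as a name
print(violates_stated_rule("pagefile.sys"))  # ordinary file name
print(violates_stated_rule(cache_name))      # the cache file name shown above
```

The wildcard pattern is flagged and the ordinary name is not. The cache file name is also not flagged by the stated rule, since ∺ and ∯ are not in the list, so the error on those files would have to come from something other than those eight characters.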

To add some detail on why using ‘descendant folders’ to search for arbitrary files is not recommended: it’s possible that indexing is helping in your tests, but in general, the time required to complete such an operation is highly variable and depends on whether you’re finding any matches. If I mistakenly use find files “.exe”, which matches nothing, then I can complete a find across my entire drive in 5-15 seconds. But if I change it to “*.exe” it takes nearly 120 seconds.

Note this is the timing in the Fixlet Debugger, which runs at full CPU, while the client runs at 2% CPU most of the time (a 50x slowdown), so 15 seconds is actually 12.5 minutes, and 120 seconds is actually 1 hr 40 mins! My test system only had about 70 GB of data on the drive being scanned, so in reality things could be much, much worse.
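The arithmetic behind those numbers can be checked with a few lines of Python. This sketch assumes, as the post does, that evaluation time scales linearly with the CPU share the client is allowed; the function name `client_minutes` is made up for the example, and real I/O-bound scans may not scale this cleanly.

```python
def client_minutes(debugger_seconds, client_cpu_percent=2):
    """Estimate client evaluation time in minutes from a full-CPU debugger timing.

    Assumes time is inversely proportional to the CPU share (the post's premise).
    """
    client_seconds = debugger_seconds * 100 / client_cpu_percent
    return client_seconds / 60


print(client_minutes(15))   # 12.5 minutes
print(client_minutes(120))  # 100.0 minutes, i.e. 1 hr 40 mins
```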

Additionally, consider that you cannot control precisely when this relevance will actually be evaluated. You can limit the frequency, but property evaluation occurs while a client is generating a report, and this property will be included if the interval has elapsed since the last evaluation. So at any time when the client is trying to generate a report (e.g. in response to an action, a change in fixlet relevance, or a heartbeat report) it may need to evaluate this property, and nothing else will happen for somewhere between 10 mins and 2 hrs or more. Clients may randomly go grey or appear unresponsive in the console as a result, and if the number of matches or the amount of data to scan (and thus the timing) changes over time, your operators may not realize or remember this as the likely cause.

We have seen customers run into these exact scenarios and spend a lot of time troubleshooting bad decisions that were made in the past, so we really want to prevent others from falling into this same trap, no matter how tempting it may be to do.