@Aram - what BFI user permissions are required for the Service Graph Connector integration? I can't find any documentation on this from either HCL or ServiceNow.

This is kind of tucked away on the SNow site - have you seen this?

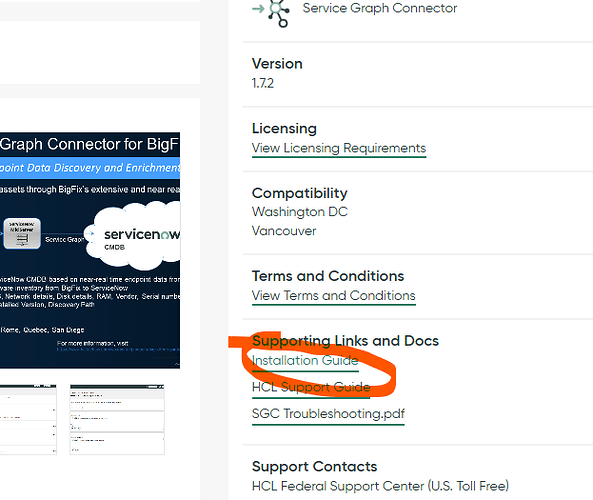

https://store.servicenow.com/appStoreAttachments.do?sys_id=b7a23f861b26de907d31ed7a234bcb49

I have seen this. Unfortunately, it doesn’t specify the minimum permissions required of the BFI user account.

I thought it did. It should only need an API token and read-only permissions. I’ll dig around internally later today if Aram doesn’t answer before I have time to do that.

Thanks @dmccalla. I have another challenge for you and @Aram. By design, the Connector won’t import data for machines whose status is anything other than OK. This means I must have credentials and access to the vCenter even though the customer is not using BFI for IBM subcapacity calculations. They just want to import the BFI computer data into ServiceNow.

Is there a setting or some feature I’ve overlooked that would allow me to tell BFI that I don’t care about the hardware capacity of the VM host, so it will not complain about “No VM Manager Data”?

I haven’t found any docs about the BFI permissions, but I think creating a dedicated BFI user for the Graph Connector ETL, with an API token and read access, would be enough.
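A quick way to confirm that a dedicated read-only user's token is sufficient is to make a plain GET request against the BFI REST API with it. Below is a minimal Python sketch under stated assumptions: the `/api/sam/v2/computers` path, the `token` and `limit` query parameters, and the host `bfi.example.com:9081` are illustrative and not confirmed anywhere in this thread, so check them against the BFI REST API documentation for your version.

```python
import json
import urllib.parse
import urllib.request

# Hypothetical BFI server address; replace with the real host and port.
BFI_BASE_URL = "https://bfi.example.com:9081"


def build_computers_url(base_url: str, token: str, limit: int = 10) -> str:
    """Build a read-only GET URL for the BFI computers endpoint.

    ASSUMPTION: the /api/sam/v2/computers path and the 'token'/'limit'
    query parameters follow BFI REST API conventions; verify for your version.
    """
    query = urllib.parse.urlencode({"token": token, "limit": limit})
    return f"{base_url}/api/sam/v2/computers?{query}"


def fetch_computers(url: str, timeout: float = 30.0) -> dict:
    """Issue the GET request and decode the JSON response body."""
    with urllib.request.urlopen(url, timeout=timeout) as response:
        return json.load(response)


if __name__ == "__main__":
    url = build_computers_url(BFI_BASE_URL, token="YOUR_API_TOKEN")
    print(url)
    # fetch_computers(url)  # uncomment to call a live BFI server
```

If the request succeeds with a user that only has read access, that is a reasonable sign the account is enough for data collection.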

As for the hardware inventory - I couldn’t find any way to bypass that. It's a hard requirement to have hardware data. Someone (@Aram), correct me if I’m wrong, but that data is needed to (initially) match computers in SNow with computers in BFI.

Thanks Duncan. My goal is to be able to import all of the computers from BFI into ServiceNow. However, the filter used by the ETL is designed to exclude computers that have a status of “No VM Manager Data”. I don’t understand the rationale for this. What I’m looking for is a workaround or configuration that will tell the ETL to include all computers, regardless of their status in BFI.

Edit:

The Auditor role will suffice for data collection.

When I say hardware data… the VM Manager data is (supposed to be) an analogue to that. You do bring up an interesting point, though. I’m not sure exactly why we would care about CPU cores or subcapacity scan information in this case, unless the Graph Connector data relies on it for completeness. I’m not really a BFI expert, so I'm getting a little out of my depth here and will have to defer to someone else on this one. Sorry I couldn’t be of more help. @ssakunala, do you have any insights on this?

I’ve been looking at the design of the API call for the ETL, and it includes this criterion:

`["computer_hardware.status","=","1"]`

If I remove it, then all the computers are retrieved. The issue, I think, is with the serial numbers provided for computers that have no VM Manager data. They all have the prefix TLM_VM_, which may make serial number matching difficult.
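One way to live with the TLM_VM_ serial numbers would be to treat them as unusable for serial matching and fall back to another attribute. The following is a hypothetical Python sketch, not how the Service Graph Connector actually matches CIs: it assumes the records carry `serial_number` and `name` fields (invented names) and that a lower-cased hostname is an acceptable fallback key.

```python
from typing import Optional

# Prefix reported in this thread on serials of computers with no VM Manager data.
PLACEHOLDER_PREFIX = "TLM_VM_"


def matchable_serial(serial: Optional[str]) -> Optional[str]:
    """Return a serial number usable for CI matching, or None.

    ASSUMPTION: serials starting with TLM_VM_ are BFI-generated placeholders
    rather than real hardware serials, so they are excluded from matching.
    """
    if not serial:
        return None
    serial = serial.strip()
    if serial.upper().startswith(PLACEHOLDER_PREFIX):
        return None
    return serial or None  # whitespace-only serials also become None


def match_key(computer: dict) -> tuple:
    """Pick a match key: the serial if usable, else the lower-cased hostname.

    The 'serial_number' and 'name' keys are hypothetical field names.
    """
    serial = matchable_serial(computer.get("serial_number"))
    if serial:
        return ("serial", serial)
    return ("hostname", (computer.get("name") or "").lower())
```

Hostname fallback is only as reliable as the naming conventions in the environment, so duplicates or renamed machines would still need manual review.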

@ssakunala Could you chime in on this? I would like to have a solution available that doesn’t involve setting up a VM Manager connection, except as a last resort.

@itsmpro92 If they are using BFI for IBM subcapacity reporting, their reports will not be accurate without VM Manager data, because the tool uses the highest PVU value per core for computers with no VM Manager data. As for the computer status, computers designated as cloud computers get the status “OK”, so that is one option. Computers designated as cloud devices get a default PVU value, which can be changed in the BFI UI if they need accurate subcapacity reporting. But please run this by their IBM compliance team to make sure they are aware of and OK with this approach.

Boyd, we are not much further into the implementation than you are (we are still in the very first phase and getting to grips with what is “in scope” and what is not), but the way it was explained to me is that the connector builds relationships between CIs and other objects/CI data types, so without the host-to-VM data it is missing a vital piece of those relationships. In my honest opinion, that should be a configurable setting: if you don’t want the relationships, VM Manager data is not required and not brought in; if you do, then you have to have it. Luckily for us, we do aim to have VM Manager data in (we just need to catch up on newly provisioned hosts and old decommissioned ones) and should be pretty close to 100%, so it's not a problem for us, but I can certainly understand why it may be a problem for some.

The bit I have the biggest problem with so far is the “in-scope” data, which is equivalent to “Software Classification” but not “Package Data”. There are a ton of instances where an entire software product shows up only as a “package”, and you have to look through both to find it. Not to mention that ServiceNow themselves expect “package data” (we do have ServiceNow Discovery, and what it discovers as “software installation” is essentially the exact equivalent of “package data”), so this design-level limitation makes no sense, especially since BFI now has the Unified Software API, which would have been perfect to use here! If only there were a setting allowing the user to specify which data they want brought in…

Thanks @ssakunala for your response; however, they are not using IBM software. They specifically chose this approach to provide software discovery data to ServiceNow. Otherwise, they wouldn’t have bothered with BFI at all. So perhaps this is an edge case to you, but from your (HCL’s) marketing, this appears to be something customers would expect. The VM Managers are not in scope for this implementation, so we have a gap for the VMs running on-prem. The majority of the servers are in Azure, so we don’t have any problems there.

Thanks, Angel, for pitching in. This is the kind of information I will need to manage the customer’s expectations. One benefit of using the BigFix Agent is that it doesn’t require a privileged Domain account to collect the data.

I’m particularly interested to hear about the scope of data in the import. Does the ServiceNow side of the ETL allow any modification to the REST API calls that are made? For instance, can it be adjusted to remove the hardware status filter? Or to adjust the software data being requested?
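Whether the ServiceNow ETL exposes its REST calls is the open question here, but for anyone querying BFI directly, the difference comes down to whether the status filter quoted earlier ends up in the `criteria` parameter. A minimal Python sketch under stated assumptions: wrapping the filters in an `{"and": [...]}` object and the `token`/`criteria` parameter names are assumptions about the BFI REST API syntax, not something confirmed in this thread.

```python
import json
import urllib.parse
from typing import List, Optional

# The status filter quoted in this thread from the ETL's API call.
STATUS_OK_FILTER = ["computer_hardware.status", "=", "1"]


def build_criteria(include_status_filter: bool,
                   extra_filters: Optional[List[list]] = None) -> Optional[str]:
    """Serialize a BFI-style criteria object, or return None if there are no filters.

    ASSUMPTION: BFI accepts filters wrapped in an {"and": [...]} object.
    """
    filters = list(extra_filters or [])
    if include_status_filter:
        filters.insert(0, STATUS_OK_FILTER)
    if not filters:
        return None  # omit the criteria parameter entirely: all computers
    return json.dumps({"and": filters}, separators=(",", ":"))


def build_query(token: str, include_status_filter: bool) -> str:
    """Build the query string for a computers request (parameter names assumed)."""
    params = {"token": token}
    criteria = build_criteria(include_status_filter)
    if criteria is not None:
        params["criteria"] = criteria
    return urllib.parse.urlencode(params)
```

Dropping the filter returns every computer, including those with “No VM Manager Data”, which brings back the TLM_VM_ serial number issue on the matching side.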

We haven’t gotten to that stage yet, so I'm not 100% sure, but based on my understanding so far, I don't think so. One thing Support suggested that should be doable is changing the field mapping (which field from BFI should be matched to which field in ServiceNow), but I haven’t been able to find where or how to do it. The screenshots in the setup/configuration document pertain to an older version of ServiceNow and do not match what I am seeing in the interface, so I don’t see the option. I have already found a fairly clear problem with the default mapping.

I’ll share what I learn as we delve into this with my customer.

@itsmpro92 As there is no IBM reporting dependency, designating the computers as Azure cloud computers should give them “OK” status for the SG Connector import (see “Identifying computers on public clouds”).

Hi @ssakunala - thanks for the solution. I just had to wait for the Public Cloud analysis to catch up before running the BFI Import.

What version of ServiceNow are you using for the integration?

We are running Washington DC.