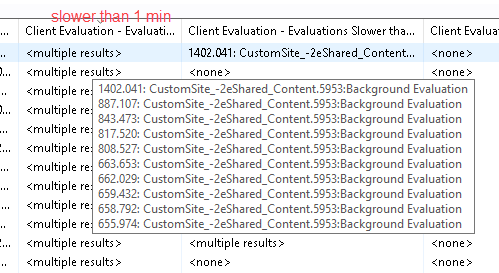

Thanks for that quick turnaround. I loaded the analysis and can see the columns you mentioned, and they are showing data, for example slower than 1 min:

Now, what do I do with it?

Another question, regarding your other Client Responsiveness post: do you mean to enable client polling on both clients and relays? At the beginning of the paragraph you mention clients, and later you mention:

If so, do I set the same polling interval I used on my clients, 300 seconds (15 mins)? And this would be for the main server and both the internal and DMZ relays, correct?

Thanks!