Hi,

My team and I are currently running a proof of concept (POC) with BigFix in our business and have hit a standstill: we’re seeing agent communication issues, particularly over UDP.

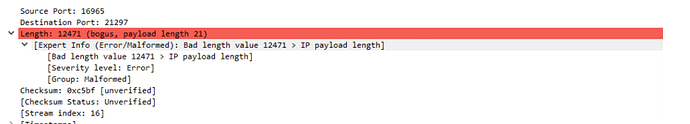

Neither the Send Refresh command nor any tasks from the server reach the BES Client via UDP. Investigating with Wireshark, we found that the packet is flagged with a bad UDP payload length.

This occurs immediately after the client receives the initial UDP packet with destination port 52311, after which there is no further communication between the client and server.
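For anyone who wants to sanity-check a capture by hand: as far as I understand it, a UDP length is reported as bad when the length field in the UDP header is below the 8-byte header size or larger than the payload the enclosing IP packet can hold. Below is a minimal Python sketch of that check on a raw IPv4 packet. It is my own illustration, not Wireshark’s code, and `check_udp_length` is a hypothetical helper name.

```python
import struct


def check_udp_length(ip_packet: bytes) -> str:
    """Classify the UDP length field of a raw IPv4 packet (illustrative only)."""
    if len(ip_packet) < 20:
        return "truncated IP header"

    # Low nibble of the first byte is the IP header length in 32-bit words.
    ihl = (ip_packet[0] & 0x0F) * 4
    total_length = struct.unpack("!H", ip_packet[2:4])[0]
    flags_frag = struct.unpack("!H", ip_packet[6:8])[0]
    protocol = ip_packet[9]

    if protocol != 17:  # 17 is the IP protocol number for UDP
        return "not UDP"

    # More Fragments flag set, or a non-zero fragment offset: the UDP length
    # describes the whole datagram, so it cannot be checked on one fragment.
    if (flags_frag & 0x2000) or (flags_frag & 0x1FFF):
        return "fragment"

    if len(ip_packet) < ihl + 8:
        return "truncated UDP header"

    _src, _dst, udp_length, _checksum = struct.unpack(
        "!HHHH", ip_packet[ihl:ihl + 8]
    )
    ip_payload = total_length - ihl

    if udp_length < 8:
        return "bad: UDP length below 8-byte header"
    if udp_length > ip_payload:
        return "bad: UDP length exceeds IP payload"
    return "ok"
```

The fragment case is kept separate because a fragmented datagram inspected without reassembly would also look too long for its IP packet, which is part of why we suspected MTU.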

During testing, we identified that this could be due to MTU fragmentation; however, adjusting the MTU made no difference. We then decided to test whether communication was being affected by an internal security product, so we installed Windows from a vanilla ISO onto a virtual machine, added it to the domain and pushed the BES Client to it, and it worked. UDP notifications were suddenly working.
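A rough way to test UDP delivery independently of BigFix would be to send probes of different sizes to a plain listener on the affected machine and see which sizes arrive. The Python sketch below assumes the BES Client service is stopped first if port 52311 is used, since otherwise the bind would fail; the function names and probe sizes are my own and purely illustrative.

```python
import socket
from typing import Optional


def open_listener(port: int, host: str = "0.0.0.0",
                  timeout: float = 5.0) -> socket.socket:
    """Bind a UDP socket on the machine under test."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    sock.settimeout(timeout)
    sock.bind((host, port))
    return sock


def send_probe(host: str, port: int, size: int) -> int:
    """Send one UDP datagram of `size` bytes; returns the bytes handed to the OS."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        return sock.sendto(b"x" * size, (host, port))


def receive_probe(listener: socket.socket) -> Optional[int]:
    """Return the size of the next datagram, or None if nothing arrives in time."""
    try:
        data, _addr = listener.recvfrom(65535)
        return len(data)
    except socket.timeout:
        return None
```

On the client one would call `open_listener(52311)` and loop on `receive_probe`, while another machine calls `send_probe` with, say, a small size and one just above the path MTU. If small probes arrive on the manually installed VM but nothing arrives on the MDT-built one, the problem sits below the BES Client.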

We reproduced this result on two other machines that did not go through our in-house MDT steps in Windows Deployment. We’re scratching our heads as to what may be causing this, as all steps that customise the OS were disabled, with the exception of the built-in scripts that run within MDT.

This may already be a case of TL;DR, but I am reaching out on this forum for any ideas on what we could try to fix this communication issue.

In short, we built one virtual machine and ran it through the MDT build process steps; this one fails UDP communication.

We built another VM using the same ISO used in MDT, but installed Windows manually without running it through MDT; this one communicates properly.

Comparing the two, I could not find any differences in NIC settings or firewall configuration, which is completely mind-boggling. We now presume that the MDT built-in scripts are customising Windows in an unfavourable way, but at this point it’s guesswork.
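To make the comparison less dependent on eyeballing, one option would be to export the same settings from both VMs to text (for example the output of `Get-NetAdapterAdvancedProperty` or `netsh advfirewall show allprofiles`) and diff the two exports. A minimal Python sketch follows; `diff_exports` is a hypothetical helper and the sample values in the usage note are made up.

```python
import difflib
from typing import List


def diff_exports(mdt_text: str, manual_text: str) -> List[str]:
    """Return only the lines that differ between two settings exports.

    Lines prefixed "- " exist only in the MDT-built export,
    lines prefixed "+ " exist only in the manually installed one.
    """
    # Strip whitespace and drop blank lines so formatting noise is ignored.
    mdt_lines = [ln.strip() for ln in mdt_text.splitlines() if ln.strip()]
    manual_lines = [ln.strip() for ln in manual_text.splitlines() if ln.strip()]

    return [
        ln for ln in difflib.ndiff(mdt_lines, manual_lines)
        if ln.startswith("- ") or ln.startswith("+ ")
    ]
```

For example, `diff_exports("MTU: 1500\nSetting X: Enabled", "MTU: 1500\nSetting X: Disabled")` would list only the two `Setting X` lines, so anything the MDT scripts changed should stand out even in a long export.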